Meta introduces SeamlessM4T, an all-in-one multimodal and multilingual AI translation model

Meta yesterday introduced SeamlessM4T, which it describes as the first all-in-one multimodal and multilingual AI translation model, designed to let people communicate across language barriers, Turanews.kz reports.

SeamlessM4T is currently available to researchers and developers under a non-commercial research license. Meta has also published the metadata of SeamlessAlign, the largest open multimodal translation dataset to date, totaling 270,000 hours of mined speech and text alignments.

Creating a universal language translator like the fictional Babel fish from Douglas Adams' The Hitchhiker's Guide to the Galaxy novels is no easy task. Existing speech-to-speech and speech-to-text systems cover only a small fraction of the world's languages.

SeamlessM4T draws on years of work by researchers around the world toward a universal translator. Compared with approaches that chain together separate models, SeamlessM4T's single unified model reduces errors and delays, improving both the efficiency and the quality of translation.

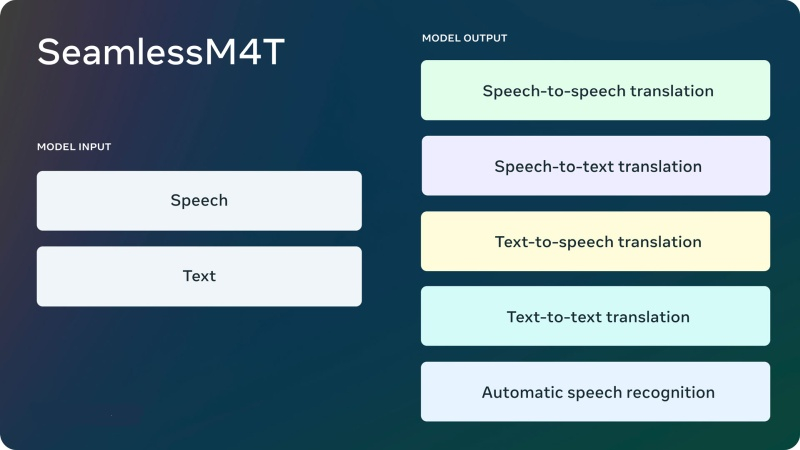

SeamlessM4T builds on the results of these earlier projects, providing multilingual and multimodal translation with a single model trained on a wide range of spoken data sources and achieving state-of-the-art results. SeamlessM4T supports:

- Speech recognition in nearly 100 languages;

- Speech-to-text translation for nearly 100 input and output languages;

- Speech-to-speech translation, supporting nearly 100 input languages and 36 output languages (including English and Russian);

- Text-to-text translation for nearly 100 languages;

- Text-to-speech translation, supporting nearly 100 input languages and 35 output languages (including English and Russian).

SeamlessM4T is the next step in researchers' efforts to build AI technology that helps connect people who speak different languages. More details about SeamlessM4T are available on the Meta AI blog.